The Rising Energy Demands of Generative AI and the Promise of Neuromorphic Computing

Potential Solutions for Data Centre Energy Consumption

Generative artificial intelligence (GenAI) technologies have significantly increased global data centre energy consumption. The expansion of GenAI workloads is reshaping both the design and the geographical distribution of data centres, with implications for power infrastructure worldwide.

Generative AI’s Energy Consumption

In 2024, data centres consumed an estimated 415 terawatt-hours (TWh) of electricity, approximately 1.5% of the world’s total electricity use. Data centre consumption is projected to grow by 12–15% per year, and a substantial fraction of this growth is attributed to GenAI workloads. Although AI workloads recently accounted for less than 15% of data centre energy consumption, projections indicate that this share may reach 49% by the end of 2025.
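These figures can be cross-checked with simple arithmetic. The sketch below derives the world total implied by the 1.5% share and compounds the 2024 figure forward to 2030. It assumes the 12–15% growth rate applies uniformly every year from 2024 to 2030, which is a simplifying assumption rather than a claim from the source.

```python
# Figures quoted in the text for 2024.
DC_2024_TWH = 415.0   # data centre electricity use, TWh
DC_SHARE = 0.015      # "approximately 1.5%" of world electricity use (rounded)

# World electricity use implied by the rounded share (approximate).
world_twh = DC_2024_TWH / DC_SHARE


def project(base_twh: float, annual_growth: float, years: int) -> float:
    """Compound a starting consumption at a constant annual growth rate."""
    return base_twh * (1.0 + annual_growth) ** years


# Assumption: the 12-15% annual growth holds constantly from 2024 to 2030.
low = project(DC_2024_TWH, 0.12, 2030 - 2024)
high = project(DC_2024_TWH, 0.15, 2030 - 2024)

print(f"Implied world use: ~{world_twh:,.0f} TWh")
print(f"2030 at 12-15%/yr: {low:,.0f}-{high:,.0f} TWh")
```

With these inputs the implied world total is roughly 27,700 TWh, and the 2030 projection spans roughly 820–960 TWh, a range that brackets the IEA projection discussed in the next section. Because the 1.5% share is rounded, the world total is only approximate.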

This trend is driven mainly by the specialised high-density servers required to train and deploy large-scale AI models. These servers, which frequently rely on advanced graphics processing units (GPUs), draw five to fifteen times more power per rack than traditional hardware. Consequently, new hyperscale data centres are being built with power capacities exceeding 100 megawatts (MW), comparable to the electricity demand of a small municipality.
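To put the 100 MW figure in perspective, the sketch below converts a facility's power capacity into annual energy use. It assumes operation over a full 8,760-hour year; the 60% average-utilisation case is a hypothetical value chosen for illustration, not a figure from the source.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 h, ignoring leap years


def annual_energy_twh(capacity_mw: float, utilisation: float = 1.0) -> float:
    """Annual energy in TWh for a site drawing `capacity_mw` at an average `utilisation` (0-1)."""
    mwh = capacity_mw * utilisation * HOURS_PER_YEAR
    return mwh / 1_000_000  # 1 TWh = 1,000,000 MWh


full = annual_energy_twh(100)          # running at full capacity all year
typical = annual_energy_twh(100, 0.6)  # hypothetical 60% average load

print(f"100 MW at full load: {full:.3f} TWh/yr")
print(f"100 MW at 60% load:  {typical:.3f} TWh/yr")
```

At full load, a single 100 MW site would use about 0.88 TWh per year, roughly 0.2% of the 415 TWh consumed by all data centres in 2024.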

Implications for Power Infrastructure

The International Energy Agency (IEA) projects that global data centre electricity demand could reach roughly 945 TWh by 2030, more than double current levels, with GenAI identified as the primary driver. This rapid and geographically concentrated growth in demand strains local power grids, and analysts have suggested that up to 40% of AI data centres may face operational constraints due to electricity shortages by 2027.
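The IEA projection can also be expressed as an implied compound annual growth rate (CAGR). The sketch below treats 415 TWh in 2024 and 945 TWh in 2030 as the two endpoints, which is an assumption about how these separately published estimates line up.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that takes `start` to `end` over `years` years."""
    return (end / start) ** (1.0 / years) - 1.0


START_TWH, END_TWH = 415.0, 945.0  # assumed 2024 and 2030 endpoints
ratio = END_TWH / START_TWH
rate = cagr(START_TWH, END_TWH, 2030 - 2024)

print(f"2030/2024 ratio: {ratio:.2f}x, implied growth: {rate:.1%} per year")
```

This gives a ratio of about 2.28 (consistent with "more than double") and an implied growth rate of about 14.7% per year, at the upper end of the 12–15% range quoted earlier.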

Advantages of Neuromorphic Computing

In response to sustainability concerns, neuromorphic computing has emerged as a potential alternative to traditional architectures. Inspired by biological neural networks, neuromorphic systems integrate memory and computation within interconnected artificial neurones and synapses, an approach that differs fundamentally from conventional (von Neumann) designs, in which memory and processing are separate units.

These systems employ spiking neural networks (SNNs), which process information only when discrete events (spikes) occur rather than computing continuously. This event-driven, asynchronous model has been reported to be up to 100 times more energy-efficient than conventional systems for suitable workloads, and it supports real-time learning and adaptation. Because information is stored in a distributed manner across these architectures, they also have a degree of inherent fault tolerance.

Figure 1. Example architecture of SNNs (source: Maciag et al., 2022)
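The event-driven behaviour of SNNs can be illustrated with a single spiking neuron. The article does not specify a neuron model, so the sketch below assumes the widely used leaky integrate-and-fire (LIF) model, with hypothetical parameter values (`tau_ms`, `threshold`). The neuron's state is touched only when an input spike arrives; the leak for the elapsed time is applied analytically at that moment, so the work done scales with the number of input events rather than with elapsed time. Neuromorphic chips realise such dynamics in hardware; this Python version is only a conceptual illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class LIFNeuron:
    """Leaky integrate-and-fire neuron, updated only when an input spike arrives."""
    tau_ms: float = 20.0    # membrane time constant (hypothetical value)
    threshold: float = 1.0  # firing threshold (arbitrary units)
    v: float = 0.0          # membrane potential
    last_t: float = 0.0     # time of the last update, in ms

    def receive(self, t_ms: float, weight: float) -> bool:
        """Apply the leak since the last event, add the synaptic weight, fire if over threshold."""
        self.v *= math.exp(-(t_ms - self.last_t) / self.tau_ms)  # exponential decay
        self.last_t = t_ms
        self.v += weight
        if self.v >= self.threshold:
            self.v = 0.0  # reset the membrane potential after an output spike
            return True
        return False


def run(events, neuron):
    """Process (time_ms, weight) input events in time order; return output spike times."""
    out = []
    for t, w in sorted(events):
        if neuron.receive(t, w):
            out.append(t)
    return out


# Three closely spaced inputs, then two isolated ones.
events = [(1.0, 0.4), (2.0, 0.4), (3.0, 0.4), (50.0, 0.4), (90.0, 0.4)]
spikes = run(events, LIFNeuron())
print("output spikes at (ms):", spikes)
```

Here the three closely spaced inputs push the potential over threshold at 3 ms, while the isolated inputs at 50 ms and 90 ms leak away without triggering a spike. Only five updates are performed over the 90 ms window, whereas a clock-driven simulation with a 1 ms step would update the neuron 90 times regardless of activity.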

Barriers and Outlook

Despite their promise, neuromorphic technologies face several challenges, including immature software ecosystems, a lack of industry standards, high development costs, and limited accessibility. Established alternatives such as GPUs and application-specific integrated circuits (ASICs) remain dominant, which further slows the adoption of neuromorphic hardware.

Nonetheless, ongoing advances in neuromorphic hardware and software, together with growing attention to the energy demands of AI infrastructure, are driving progress in the field. Neuromorphic computing is particularly well suited to edge AI and Internet of Things (IoT) devices, where minimal power consumption and on-device learning are crucial.

In Conclusion

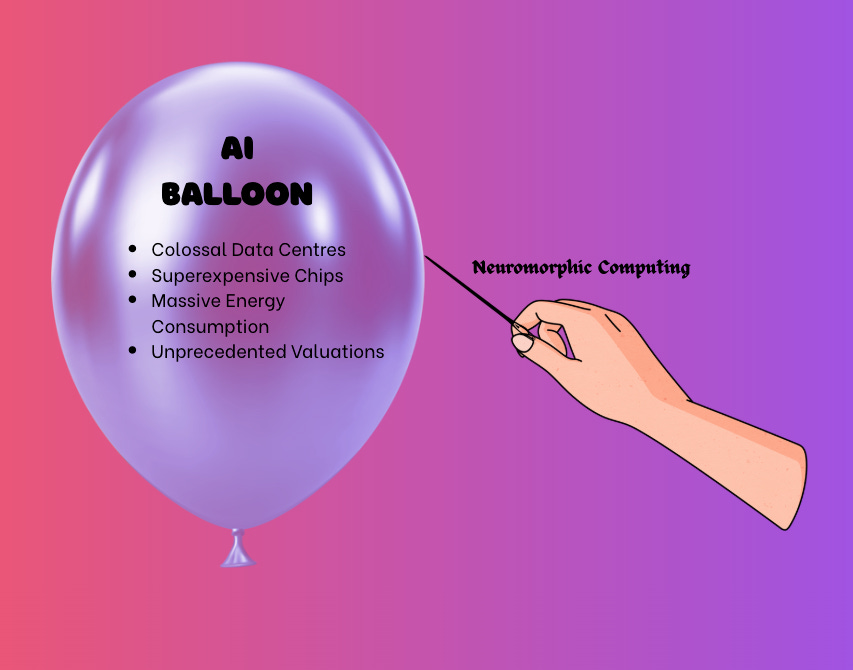

Figure 2. Will neuromorphic computing burst the GenAI balloon?

The growing energy demands of generative AI call for a re-evaluation of the technologies underlying data centres. Neuromorphic computing offers a promising path towards lower power consumption while enabling more adaptable AI systems. Continued research and development may establish neuromorphic systems as critical components in the sustainable evolution of artificial intelligence.