The Silicon Ceiling: Why AI Must Ditch Quadratic Scaling and Embrace the Biological Blueprint

The Reigning Giants: Neural Networks and the Transformer

The unprecedented leap in Artificial Intelligence (AI) over the last decade is largely attributable to one foundational architecture, the Neural Network (NN). Inspired by the structure of the human brain, NNs are composed of interconnected nodes (neurons) organised in layers. Each node receives inputs, computes a weighted sum, applies an activation function, and passes the result to the next layer. Early NNs such as Feedforward Neural Networks and Convolutional Neural Networks (CNNs), the latter developed specifically for image tasks, were groundbreaking, and they were soon joined by Recurrent Neural Networks (RNNs), designed to model sequential data using connections across time steps. The Transformer is now the dominant architecture in AI, particularly for language models such as BERT and GPT.
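The node behaviour described above can be illustrated with a minimal sketch of one fully connected layer. The sigmoid activation, the bias term, and all numeric values are assumptions chosen for illustration; the text does not specify them.

```python
import math

def sigmoid(x: float) -> float:
    """Logistic activation, squashing any real input into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def dense_layer(inputs, weights, biases):
    """Forward pass of one fully connected layer.

    weights[j] holds the incoming weights of node j. Each node computes a
    weighted sum of the inputs, adds its bias, and applies the activation.
    """
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]

# Two inputs feeding a layer of three nodes (hypothetical values).
layer_out = dense_layer(
    inputs=[0.5, -1.0],
    weights=[[0.1, 0.2], [0.0, 0.0], [-0.3, 0.8]],
    biases=[0.0, 0.0, 0.1],
)
```

Stacking several such layers, each feeding the next, gives the layered structure the paragraph describes.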

A Generational Leap

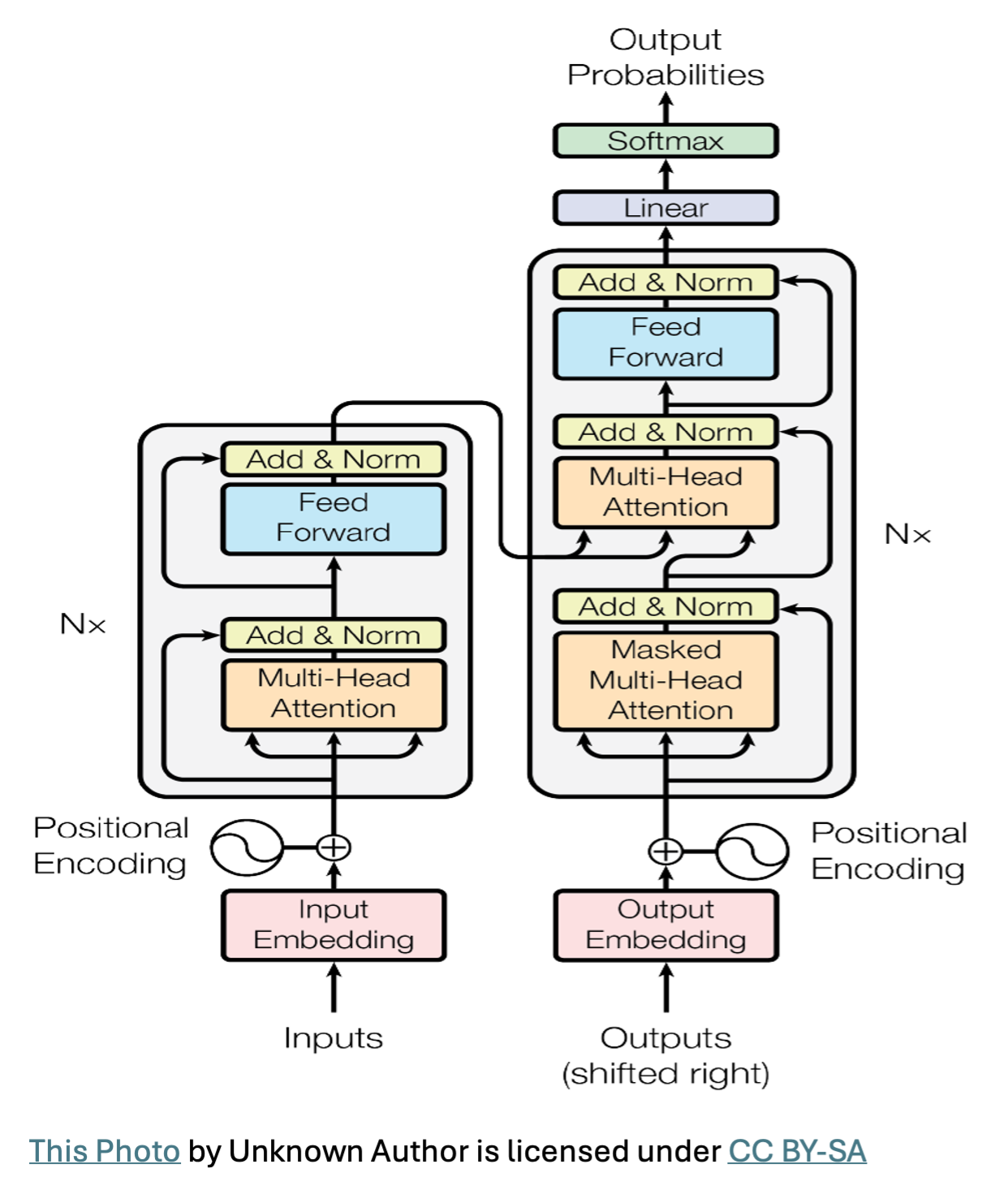

Transformers represent a significant advancement because they efficiently handle sequential data, primarily text, by moving away from purely recurrent or convolutional designs. Their core innovation is the attention mechanism, which models relationships between all elements in a sequence, regardless of how far apart they sit in that sequence.

Key components of this powerful design include:

Multi-Head Self-Attention: This mechanism allows each token to “attend” to every other token, effectively capturing dependencies and context across the sequence.

Positional Encoding: Since the Transformer structure is not inherently sequential, this component adds crucial information about the token order.
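The two components above can be sketched in a few lines of plain Python. This is a deliberately reduced illustration, not a full Transformer layer: it uses a single attention head, skips the learned query, key, and value projections (so Q = K = V = the input), and assumes the sinusoidal encoding, which is one common choice among several. All function names and token values are hypothetical.

```python
import math

def positional_encoding(seq_len, d_model):
    """Sinusoidal position signal: sine on even dimensions, cosine on odd."""
    pe = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            angle = pos / (10000 ** ((2 * (i // 2)) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        pe.append(row)
    return pe

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x):
    """Single-head self-attention with Q = K = V = x (no learned projections)."""
    d = len(x[0])
    out, all_weights = [], []
    for q in x:
        # Score this token against every token in the sequence.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in x]
        w = softmax(scores)
        all_weights.append(w)
        # Output is the attention-weighted average of all token vectors.
        out.append([sum(wj * v[c] for wj, v in zip(w, x)) for c in range(d)])
    return out, all_weights

# Tokens 0 and 2 are identical; only the position signal tells them apart.
tokens = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0], [1.0, 0.0, 0.0, 0.0]]
pe = positional_encoding(len(tokens), 4)
x = [[t + p for t, p in zip(tok, pos)] for tok, pos in zip(tokens, pe)]
attended, weights = self_attention(x)
```

Each row of `weights` has one entry per token in the sequence, which is why the attention matrix has as many rows and columns as there are tokens.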

The original Transformer follows an Encoder-Decoder architecture, processing an input sequence and generating an output sequence; later models often keep only one half, with BERT using the encoder stack and GPT the decoder stack.

While this architecture has enabled massive success, it has simultaneously introduced fundamental and unsustainable constraints.

The Bottleneck: Significant Limitations of Current Architectures

Recent studies and technical reports published around 2025 highlight that, despite significant advancements, transformer-based and neural network models still possess substantial technical, operational, and conceptual limitations. These constraints threaten the field’s ability to achieve true Artificial General Intelligence (AGI) and sustainable scaling:

High Computational and Resource Cost: Training state-of-the-art transformer models requires extraordinary amounts of compute power, memory, time, and financial capital. This demand puts these models largely out of reach for smaller organisations, and the high energy usage creates a substantial environmental footprint.

Limited Long-Range Sequence Handling: Although transformers excel at moderate-length sequences, they become increasingly expensive on extremely long data streams. Because self-attention compares every token with every other token, compute and memory grow quadratically with sequence length, making very long context processing prohibitively costly.

Dependence on Large, High-Quality Datasets: These deep neural networks demand massive volumes of carefully curated and annotated data. If the datasets are biased or inadequate, the resulting outputs are unreliable and potentially unfair, particularly in sensitive areas such as healthcare and finance.

Interpretability & Black Box Nature: Deep neural networks and transformers often lack transparency, making their internal reasoning difficult to explain. This opacity raises serious concerns regarding trust, accountability, and regulatory compliance in high-stakes applications.

Scaling Plateau and Diminishing Returns: Leading research labs have reported that simply making existing models larger now yields diminishing improvements, suggesting that fundamental architectural limits are being reached.

Stability Issues: Transformers can be sensitive during training, prone to collapse, non-convergence, or instability without elaborate tuning.
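To make the quadratic scaling point from the list above concrete, the following back-of-the-envelope sketch estimates the memory needed to hold a single attention score matrix. It assumes 2 bytes per score (16-bit floats) and counts one head in one layer only; the function name is hypothetical. Some implementations avoid storing the full matrix, but the number of pairwise comparisons still grows the same way.

```python
def attention_matrix_bytes(seq_len: int, bytes_per_score: int = 2) -> int:
    """Memory for one seq_len x seq_len attention score matrix (one head, one layer)."""
    return seq_len * seq_len * bytes_per_score

for n in (1_000, 10_000, 100_000):
    print(f"{n:>7} tokens -> {attention_matrix_bytes(n) / 1e9:.3f} GB")
```

Under these assumptions a tenfold increase in sequence length costs a hundredfold increase in memory: roughly 0.002 GB at 1,000 tokens, 0.2 GB at 10,000, and 20 GB at 100,000.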

The Necessity of Biological Inspiration: Moving Beyond the Silicon Ceiling

If AI is to keep advancing, improve energy efficiency, and progress toward AGI, it must explore fundamental alternatives that overcome the quadratic scaling problem. A shift is now underway toward more efficient, robust, and interpretable alternatives. One of the most promising avenues involves Brain-Inspired Computational Architectures. This paradigm shifts away from traditional von Neumann architectures, in which memory and processing are separate, toward models that mimic the structure and function of biological neural systems. Modern brain-inspired computing systems prioritise principles such as event-driven processing, which enables sparse and asynchronous computation, and local plasticity, where learning occurs through local synaptic modifications rather than global backpropagation.
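The benefit of event-driven processing can be sketched by counting operations. The example below compares a conventional update, which visits every input on every step, with an event-driven update that does work only for inputs that actually spiked. The layer sizes, weights, and spike indices are hypothetical, and operation counts stand in for energy only as a rough proxy.

```python
def dense_update(weights, activity):
    """Clock-driven style: visit every input on every step, active or not."""
    n_out = len(weights[0])
    totals = [0.0] * n_out
    ops = 0
    for i, a in enumerate(activity):
        for j in range(n_out):
            totals[j] += weights[i][j] * a
            ops += 1
    return totals, ops

def event_driven_update(weights, events):
    """Event-driven style: only inputs that spiked trigger any work."""
    n_out = len(weights[0])
    totals = [0.0] * n_out
    ops = 0
    for i in events:
        for j in range(n_out):
            totals[j] += weights[i][j]
            ops += 1
    return totals, ops

n_in, n_out = 100, 10
weights = [[0.01 * (i + j) for j in range(n_out)] for i in range(n_in)]
events = [3, 42, 77]  # indices of the inputs that spiked this step
activity = [1.0 if i in events else 0.0 for i in range(n_in)]

dense_totals, dense_ops = dense_update(weights, activity)
sparse_totals, sparse_ops = event_driven_update(weights, events)
```

Both paths produce the same totals, but with 3 active inputs out of 100 the event-driven path performs 30 accumulations instead of 1,000. The saving scales with how sparse the activity is.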

Brain-Inspired Architectures: Examples and Details

Brain-inspired approaches, primarily focused on neuromorphic hardware and specialised network models, exhibit measurable advantages in energy efficiency, temporal processing, and adaptive learning.

1. Spiking Neural Networks (SNNs)

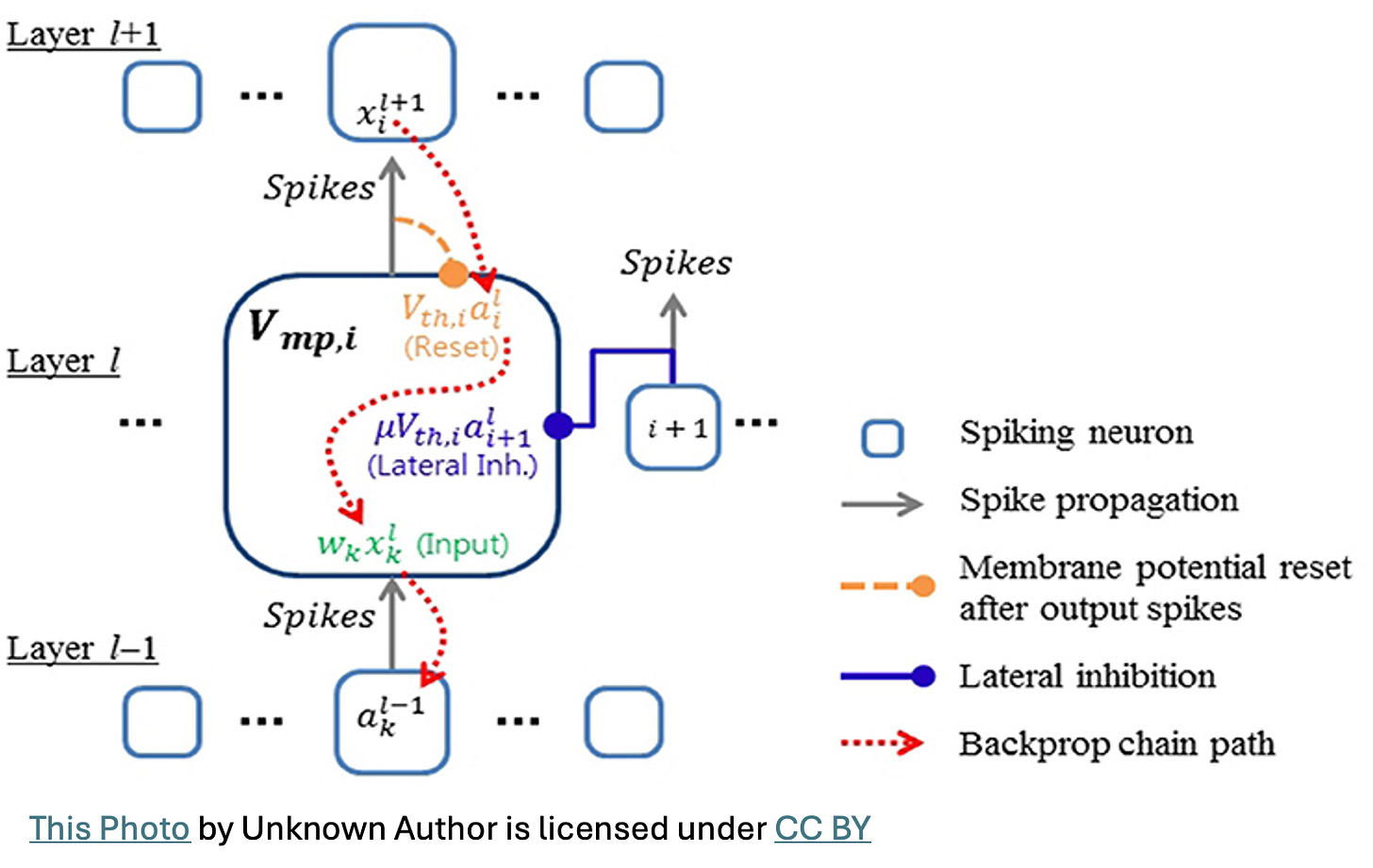

Spiking neural networks are the predominant focus of efforts to emulate brain-like function. In SNNs, information is encoded as discrete, time-dependent spikes rather than continuous activations.

Biological Plausibility: SNNs mimic synaptic dynamics and deliver processing that is both energy-efficient and adaptive. Spiking dynamics are emulated in all examined studies focusing on these models.

Software and Architectural Advances: Sophisticated frameworks, such as SpikingJelly (a PyTorch-compatible toolkit offering up to 11x training speedups), have emerged. Researchers are even integrating attention mechanisms into SNNs, resulting in innovations like the Spike-driven Transformer V2, which achieves competitive results on benchmarks like ImageNet.
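The idea of encoding information as discrete, time-dependent spikes can be illustrated with a leaky integrate-and-fire (LIF) neuron, one of the simplest spiking models. This is a minimal discrete-time sketch in plain Python, not the SpikingJelly API; the threshold, leak factor, and input values are assumptions.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, v_reset=0.0):
    """Discrete-time leaky integrate-and-fire neuron.

    Each step the membrane potential decays by `leak`, integrates the input,
    and emits a spike (1) when it reaches `threshold`, then resets.
    """
    v = v_reset
    spikes = []
    for current in input_current:
        v = leak * v + current
        if v >= threshold:
            spikes.append(1)
            v = v_reset
        else:
            spikes.append(0)
    return spikes

# A constant input of 0.4 crosses the threshold on every third step.
spike_train = simulate_lif([0.4] * 10)
print(spike_train)  # [0, 0, 1, 0, 0, 1, 0, 0, 1, 0]
```

A stronger input makes the neuron fire more often and a weak one may never reach threshold, so the timing and rate of spikes carry the signal.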

2. Neuromorphic Hardware Platforms

These platforms implement the principle of co-locating memory and computation to minimise data movement, significantly reducing energy usage and latency. They offer quantitative evidence of exceptional energy efficiency relative to traditional digital systems.

IBM TrueNorth: This hardware prototype implements a tile-based architecture containing 1 million neurons and 256 million synapses across 4,096 cores. It demonstrated real-time video processing at 63 milliwatts, achieving energy usage up to 176,000 times lower per event than conventional simulators.

Intel Loihi: Featuring 128 neuromorphic cores, Loihi supports various neuron models and demonstrates advantages in real-time processing for event-driven tasks.

SpiNNaker: Provides a massively parallel architecture using 1 million ARM cores designed for large-scale, real-time neural simulation.
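The TrueNorth figures quoted above imply a regular per-core layout, which a short calculation makes visible. The sketch assumes that "1 million" and "256 million" are the binary values 2^20 and 2^28, which is what makes the division across 4,096 cores exact.

```python
CORES = 4_096
NEURONS = 2**20    # "1 million" neurons, assumed to mean 1,048,576
SYNAPSES = 2**28   # "256 million" synapses, assumed to mean 268,435,456

neurons_per_core = NEURONS // CORES                           # 256
synapses_per_core = SYNAPSES // CORES                         # 65,536
synapses_per_neuron = synapses_per_core // neurons_per_core   # 256
```

Under these assumptions each core holds 256 neurons and a 256 x 256 grid of synapses, so every neuron can receive up to 256 inputs stored locally on its own core.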

3. Novel Device Technologies

Innovations in materials and devices are crucial for system efficiency:

Memristive Devices: These are utilised for in-memory computing, providing substantial energy benefits by eliminating the energy cost of transferring data between memory and processing units. Memristive crossbar arrays are studied for their potential in massive parallelism and analog computation.

Liquid Neural Networks: Developed at MIT and other labs, these networks can adapt their internal dynamics in real time, promoting robustness and efficiency in dynamic environments.
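The in-memory computing principle behind memristive crossbar arrays can be sketched as an idealised circuit calculation. Weights are stored as device conductances, inputs are applied as row voltages, and each column wire sums the resulting currents, so the array performs a matrix-vector multiplication where the data already resides. The sketch ignores wire resistance, device noise, and nonlinearity; the function name and all values are hypothetical.

```python
def crossbar_mvm(conductances, voltages):
    """Column currents of an idealised crossbar.

    conductances[i][j] is the conductance (siemens) of the device joining
    input row i to output column j. By Ohm's law each device passes
    I = G * V, and by Kirchhoff's current law the currents on a shared
    column wire add up, so each column reads out one dot product.
    """
    n_cols = len(conductances[0])
    return [
        sum(conductances[i][j] * voltages[i] for i in range(len(voltages)))
        for j in range(n_cols)
    ]

# 3 input rows, 2 output columns (hypothetical device values).
G = [[1e-6, 2e-6], [3e-6, 4e-6], [5e-6, 6e-6]]  # siemens
V = [0.1, 0.2, 0.3]                             # volts
currents = crossbar_mvm(G, V)                   # amperes, one per column
```

In physical hardware all columns are read out in parallel in a single step, which is the source of the massive parallelism mentioned above; the loop here only mimics that result in software.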

A Call for Research and Investment

The field of brain-inspired computational architecture has matured significantly between 2020 and 2025, demonstrating strong performance in domains that require event-driven processing, temporal sequence handling, and energy efficiency. However, the field faces several critical challenges that require concerted research and investment:

Bridging the Gap: A persistent disconnect exists between algorithm development and hardware constraints, alongside fragmentation across multiple incompatible software toolchains.

Standardisation: The lack of widely accepted standardised benchmarks and inconsistent energy measurement methodologies hampers fair comparisons and reproducibility.

On-Chip Learning: Most current neuromorphic hardware requires offline training, which limits its adaptability and flexibility.

Future success hinges on targeted technical priorities, including:

Developing Robust On-Chip Learning algorithms that enable reliable synaptic plasticity directly on neuromorphic hardware.

Implementing Cross-Platform Standardisation (such as expanding the Neuromorphic Intermediate Representation, NIR) to allow seamless deployment across diverse platforms.

Promoting End-to-End Co-Design, optimising the entire system stack from physical devices to high-level applications for maximal efficiency gains.
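To give a flavour of what on-chip learning with local synaptic plasticity involves, the sketch below implements pair-based spike-timing-dependent plasticity (STDP), a commonly studied local rule. It is offered as one illustrative example, not as the method any particular chip uses; the amplitudes, time constant, and weight bounds are assumptions.

```python
import math

def stdp_delta(t_pre, t_post, a_plus=0.01, a_minus=0.012, tau=20.0):
    """Weight change for one pre/post spike pair (times in ms).

    Pre before post (causal) strengthens the synapse; post before pre
    weakens it. The effect decays exponentially with the time gap.
    """
    dt = t_post - t_pre
    if dt > 0:
        return a_plus * math.exp(-dt / tau)
    if dt < 0:
        return -a_minus * math.exp(dt / tau)
    return 0.0

def apply_stdp(weight, t_pre, t_post, w_min=0.0, w_max=1.0):
    """Update one synapse using only its own two spike times, then clip."""
    return min(w_max, max(w_min, weight + stdp_delta(t_pre, t_post)))

w = 0.5
w_after_causal = apply_stdp(w, t_pre=10.0, t_post=15.0)  # strengthened
w_after_acausal = apply_stdp(w, t_pre=15.0, t_post=10.0)  # weakened
```

The update depends only on the spike times at the two ends of a single synapse, which is what makes rules of this kind candidates for implementation directly in hardware, without a global backward pass.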

By investing in these brain-inspired frontiers, researchers can move beyond the computational and scalability limits of the current transformer generation, paving the way for truly adaptive, energy-efficient, and biologically plausible AI systems.

References

Clark, D. G., Livezey, J. A., Chang, E. F., & Bouchard, K. E. (2018). Spiking Linear Dynamical Systems on Neuromorphic Hardware for Low-Power Brain-Machine Interfaces. arXiv preprint.

Dulundu, A. (2024). Advancing Artificial Intelligence: The Potential of Brain-Inspired Architectures and Neuromorphic Computing for Adaptive, Efficient Systems. Next Frontier For Life Sciences and AI, 8(1), 53. https://doi.org/10.62802/tfme0736

Fang, W., Wu, M., Ding, J., Zhou, Y., Xie, H., Chen, R., Li, Y., Wu, X., Tian, Z., Wang, C., & Yu, Z. (2023). SpikingJelly: An open-source machine learning infrastructure platform for spike-based intelligence. Science Advances. https://doi.org/10.1126/sciadv.adi1480

Fair, K. L., Akopyan, F., Taba, B., Cassidy, A. S., Sawada, J., & Risk, W. P. (2019). Sparse Coding Using the Locally Competitive Algorithm on the TrueNorth Neurosynaptic System. Frontiers in Neuroscience. https://doi.org/10.3389/fnins.2019.00754

Fu, K., Wang, Y., Zhu, J., Rao, M., Yang, Y., & Li, H. (2020). Reservoir Computing with Neuromemristive Nanowire Networks. In International Joint Conference on Neural Networks (IJCNN) (pp. 1–8). https://doi.org/10.1109/IJCNN48605.2020.9207727

Gandolfi, D., Mapelli, J., & Puglisi, F. M. (2025). Editorial: Brain-inspired computing: from neuroscience to neuromorphic electronics for new forms of artificial intelligence. Frontiers in Neuroscience, 19. https://doi.org/10.3389/fnins.2025.1565811

Hong, C., Wang, Y., Li, M., Li, Z., Sun, Z., & Duan, L. (2024). SPAIC: A Spike-Based Artificial Intelligence Computing Framework. IEEE Computational Intelligence Magazine. https://doi.org/10.1109/mci.2023.3327842

Li, G., Deng, L., Tang, H., Pan, G., Tian, Y., Roy, K., & Maass, W. (2024). Brain-Inspired Computing: A Systematic Survey and Future Trends. Proceedings of the IEEE, 112(6), 544–584. https://doi.org/10.1109/jproc.2024.3429360

Li, X., Sun, X., Shu, L., & Wang, K. (2022). Applying Neuromorphic Computing Simulation in Band Gap Prediction and Chemical Reaction Classification. ACS Omega. https://doi.org/10.1021/acsomega.1c04287

Mehonic, A., Sebastian, A., & Rajendran, B. (2020). Memristors—From in-memory computing, deep learning acceleration, and spiking neural networks to the future of neuromorphic and bio-inspired computing. Advanced Intelligent Systems. https://doi.org/10.1002/aisy.202000085

Merolla, P. A., Arthur, J. V., Alvarez-Icaza, R., Cassidy, A. S., Sawada, J., Akopyan, F., Jackson, B. L., Imam, N., Guo, C., Nakamura, Y., Brezzo, B., Vo, I., Esser, S. K., Appuswamy, R., Taba, B., Amir, A., Flickner, M. D., Risk, W. P., Manohar, R., & Modha, D. S. (2014). A million spiking-neuron integrated circuit with a scalable communication network and interface. Science, 345(6197), 668–673. https://doi.org/10.1126/science.1254642

Pan, W., Zhao, F., Zhao, Z., & Zeng, Y. (2024). Brain-inspired Evolutionary Architectures for Spiking Neural Networks. IEEE Transactions on Artificial Intelligence. https://doi.org/10.1109/tai.2024.3407033

Parhi, K. K., & Unnikrishnan, N. K. (2020). Brain-Inspired Computing: Models and Architectures. IEEE Open Journal of Circuits and Systems, 1, 185–204. https://doi.org/10.1109/ojcas.2020.3032092

Pedersen, J. E., Aimar, A., Pless, B., Davies, M., Delorme, S., Hauris, S., Hirayama, T., Hussain, A., Lensen, F., Moin, S. T., Mulas, F., Perin, F., Plesser, H. E., Pols, T., Protopopov, I., Savel’ev, A., Schürmann, F., Stöckmann, B., Thabouret, L., & Wijnholds, E. (2024). Neuromorphic intermediate representation: A unified instruction set for interoperable brain-inspired computing. Nature Communications. https://doi.org/10.1038/s41467-024-52259-9

Rathi, N., Chakraborty, I., Kosta, A. K., Ankit, A., Panda, P., & Roy, K. (2022). Exploring Neuromorphic Computing Based on Spiking Neural Networks: Algorithms to Hardware. ACM Computing Surveys. https://doi.org/10.1145/3571155

Schuman, C. D., Potok, T., Patton, R., Birdwell, J., Dean, M. E., Rose, G., & Plank, J. (2017). A Survey of Neuromorphic Computing and Neural Networks in Hardware. arXiv preprint.

Xia, Q., & Yang, J. J. (2019). Memristive crossbar arrays for brain-inspired computing. Nature Materials, 18(4), 309–323. https://doi.org/10.1038/s41563-019-0291-x

Yang, Y., Wang, Z., Li, Y., Wu, J., Zhang, Q., Li, H., & Zhai, J. (2022). A Perovskite Memristor with Large Dynamic Space for Analog-Encoded Image Recognition. ACS Nano. https://doi.org/10.1021/acsnano.2c09569

Yao, M., Li, Z., Sun, Y., Luo, S., Liu, C., & Fan, D. (2024). Spike-driven Transformer V2: Meta Spiking Neural Network Architecture Inspiring the Design of Next-generation Neuromorphic Chips. arXiv preprint.

Yu, Q., Zeng, Y., Li, Z., Liu, D., Hu, Z., Liu, S., Li, Y., Tang, H., & Hu, X. (2022). Synaptic Learning With Augmented Spikes. IEEE Transactions on Neural Networks and Learning Systems. https://doi.org/10.1109/tnnls.2020.3040969

Zhang, T., Yang, K., Xu, X., Cai, Y., Yang, Y., & Huang, R. (2019). Memristive Devices and Networks for Brain‐Inspired Computing. Physica Status Solidi (RRL) – Rapid Research Letters, 13(8). https://doi.org/10.1002/pssr.201900029

Zhang, Y., Wang, Z., Zhu, J., Yang, Y., Rao, M., Song, W., Zhuo, Y., Zhang, X., Cui, M., Shen, L., Huang, R., & Yang, J. J. (2020). Brain-inspired computing with memristors: Challenges in devices, circuits, and systems. Applied Physics Reviews, 7(1). https://doi.org/10.1063/1.5124027

Zolfagharinejad, M., Alegre-Ibarra, U., Chen, T., Kinge, S., & van der Wiel, W. G. (2024). Brain-inspired computing systems: a systematic literature review. The European Physical Journal B, 97(6). https://doi.org/10.1140/epjb/s10051-024-00703-6